LlamaIndex

Weave is designed to simplify the tracking and logging of all calls made through the LlamaIndex Python library.

When working with LLMs, debugging is inevitable. Whether a model call fails, an output is misformatted, or nested model calls create confusion, pinpointing issues can be challenging. LlamaIndex applications often consist of multiple steps and LLM call invocations, making it crucial to understand the inner workings of your chains and agents.

Weave simplifies this process by automatically capturing traces for your LlamaIndex applications. This enables you to monitor and analyze your application's performance, making it easier to debug and optimize your LLM workflows. Weave also helps with your evaluation workflows.

Getting Started

To get started, call weave.init() at the beginning of your script. The argument to weave.init() is a project name that helps you organize your traces.

Tracing

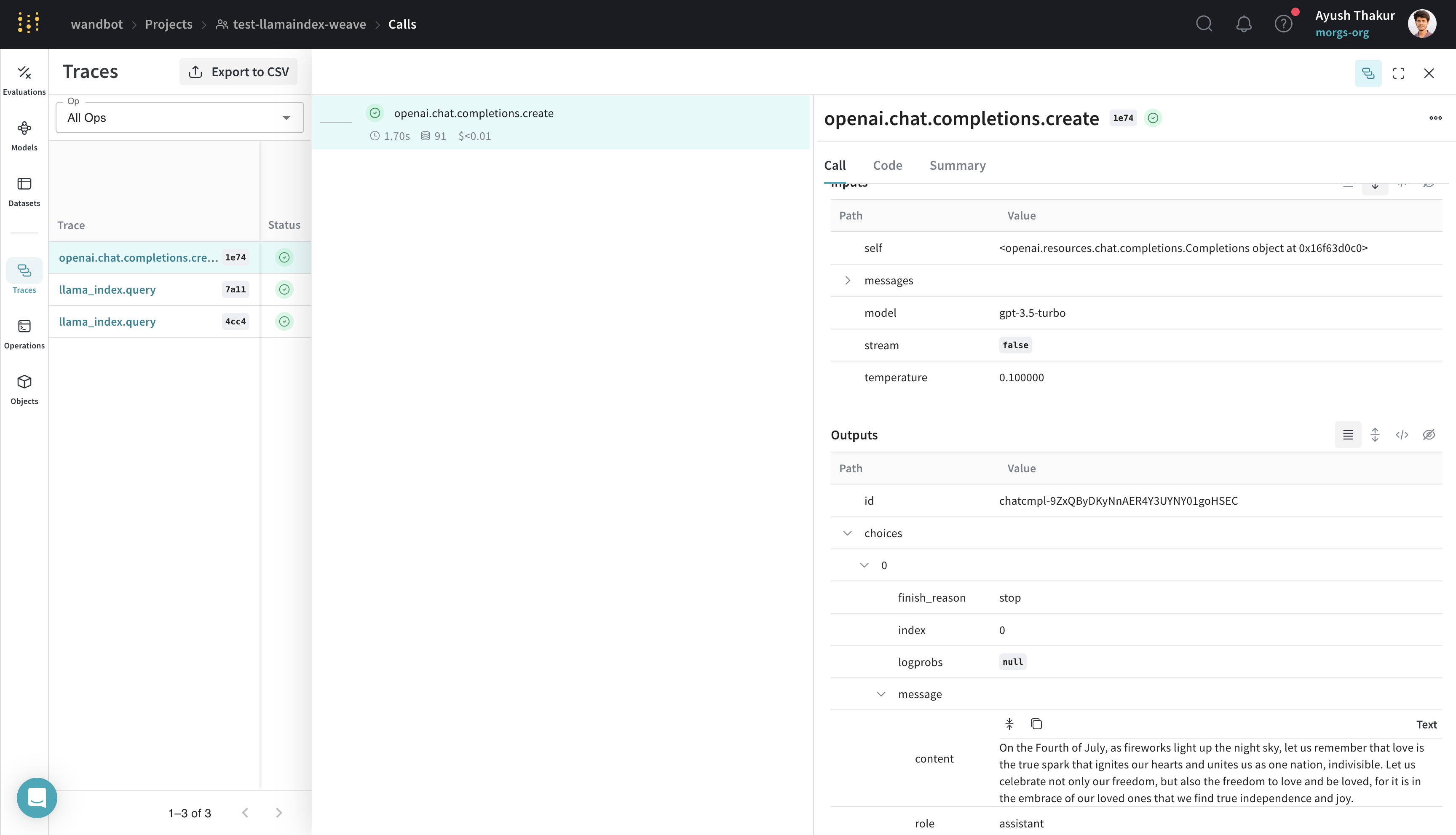

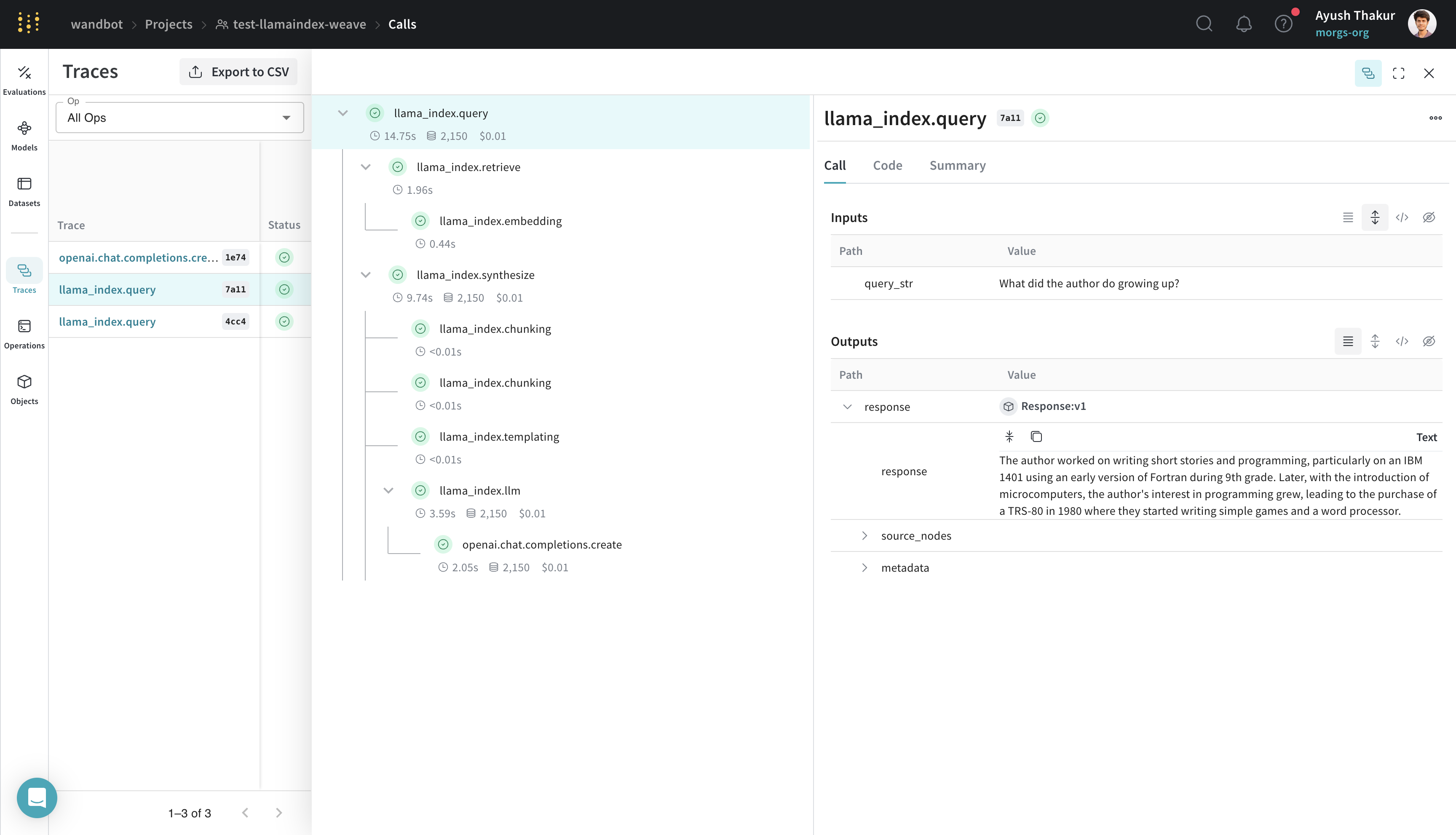

LlamaIndex is known for how easily it connects data with LLMs. A simple RAG application requires an embedding step, a retrieval step, and a response synthesis step. As applications grow more complex, it becomes important to store traces of individual steps in a central database during both development and production. These traces are essential for debugging and improving your application. Weave automatically tracks all calls made through the LlamaIndex library, including prompt templates, LLM calls, tools, and agent steps, and you can view the traces in the Weave web interface. A typical example is the simple RAG pipeline from LlamaIndex's Starter Tutorial (OpenAI).

One-click observability 🔭

LlamaIndex provides one-click observability 🔭 to help you build principled LLM applications in a production setting. Our integration builds on this capability and automatically sets WeaveCallbackHandler() as llama_index.core.global_handler. As a user of LlamaIndex and Weave, all you need to do is initialize a Weave run with weave.init(<name-of-project>).

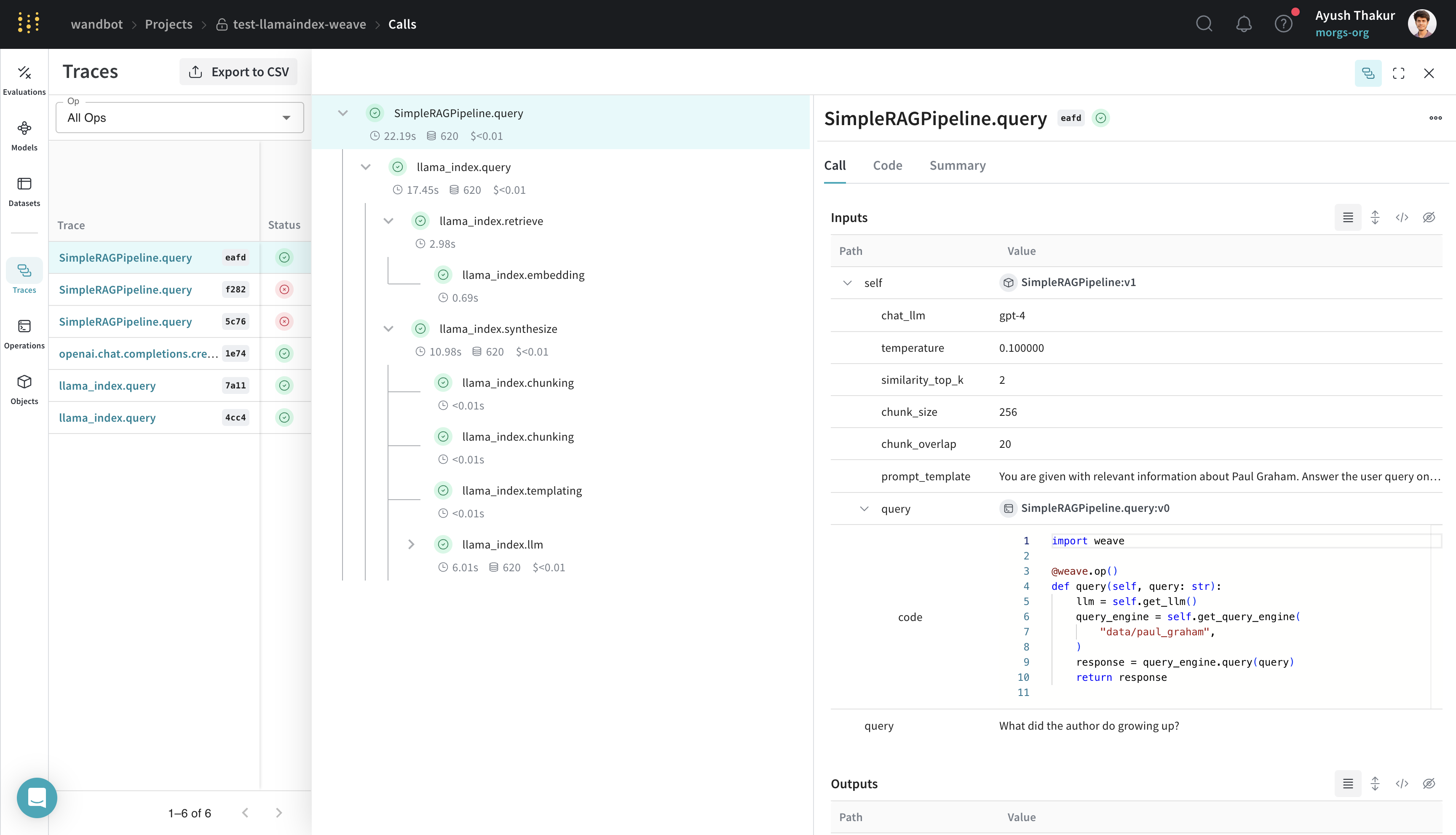

Create a Model for easier experimentation

Organizing and evaluating LLM applications across use cases is challenging because they involve multiple components, such as prompts, model configurations, and inference parameters. With weave.Model, you can capture and organize experimental details such as system prompts or the models you use, making it easier to compare different iterations.

You can build a LlamaIndex query engine inside a weave.Model, using data that can be found in the weave/data folder.

In this setup, a SimpleRAGPipeline class subclassed from weave.Model organizes the important parameters of the RAG pipeline, and decorating the method that runs the query with weave.op() enables tracing.

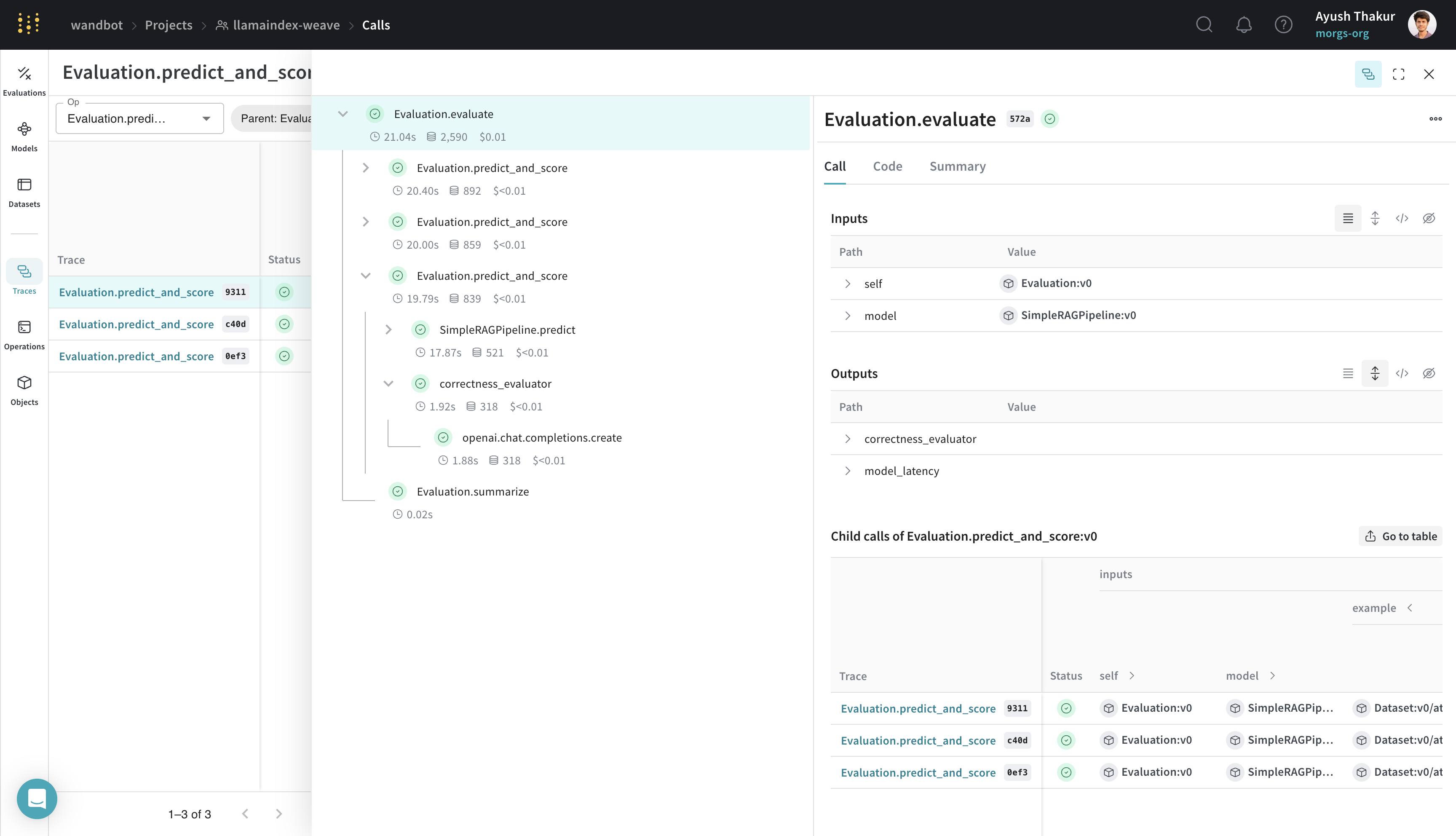

Doing Evaluation with weave.Evaluation

Evaluations help you measure the performance of your applications. With the weave.Evaluation class, you can capture how well your model performs on specific tasks or datasets, making it easier to compare different models and iterations of your application. You can use it to evaluate the model created above.

weave.Evaluation requires an evaluation dataset, a scorer function, and a weave.Model. Here are a few nuances about the three key components:

- Make sure that the keys of the evaluation sample dicts match the arguments of the scorer function and of the weave.Model's predict method.
- The weave.Model should have a method named predict, infer, or forward. Decorate this method with weave.op() for tracing.
- The scorer function should be decorated with weave.op() and should have output as a named argument.

By integrating Weave with LlamaIndex, you get comprehensive logging and monitoring of your LLM applications, which makes debugging easier and lets you use evaluations to optimize performance.